Change Data Capture in Postgres: How to Use Logical Decoding and wal2json

Databases don't live in isolation. Databases live in an ecosystem of software components: caches, search, dashboards, analytics, other databases, data lakes, web apps, and more. Your Postgres database partners with all these components to deliver the unique value of your application.

How does your ecosystem stay in sync? How do those other components get informed about changes in Postgres? Change data capture, or CDC, refers to any solution that identifies new or modified data.

One solution: maybe you add a timestamp column to your PostgreSQL tables to record change times. Periodically you run a query to pull all the new data; that timestamp column helps you identify what's new since your last pull. It can be a workable solution if you're okay with that schema change and with batched updates, only identifying what's new on some periodic basis. You have to decide on an appropriate batching frequency: too often may tie up CPU; too infrequent and you'll fall behind on updates.

Real-time data updates let you keep disparate data systems in continuous sync and respond quickly to new information. Like being able to show an online shopper recommendations based on the items they've placed in their cart so far. Or, for a bank, being able to send customers a notification when there's an unusual transaction on their credit card. Not to mention being able to use the customer's response to adjust whether similar subsequent payments go through.

That's where transaction log-based change data capture comes in. The transaction log naturally keeps track of each data change as it happens. You just need a way to read the log.

Logical decoding is the official name of PostgreSQL's log-based change data capture feature. If the term logical decoding sounds unfamiliar, you may have heard of wal2json instead. Wal2json is a popular output plugin for logical decoding. People often use 'wal2json' to refer to 'wal2json + logical decoding'. (FYI, Azure Database for PostgreSQL, our managed database service for Postgres, supports logical decoding and wal2json.)

Let's dive into this powerful Postgres feature.

Question: What is "logical decoding" decoding?

Answer: The WAL, PostgreSQL's write-ahead log.

The WAL (or transaction log) keeps track of all committed data transactions: it is the authority on everything that has happened on your Postgres instance. Its primary purpose is to help your Postgres database recover its state in the event of a crash. The WAL is written in a special format that Postgres understands because Postgres is its first and primary consumer.

But such a log of all database changes is a handy way for other interested parties to understand what has happened on the database. And not only what happened, but in what order.

So, there's this treasure trove of data changes in Postgres (the WAL), but it's written in a format your non-Postgres services won't understand. Enter logical decoding: the way Postgres enables you to translate (decode!) and emit the WAL into a form you can use.

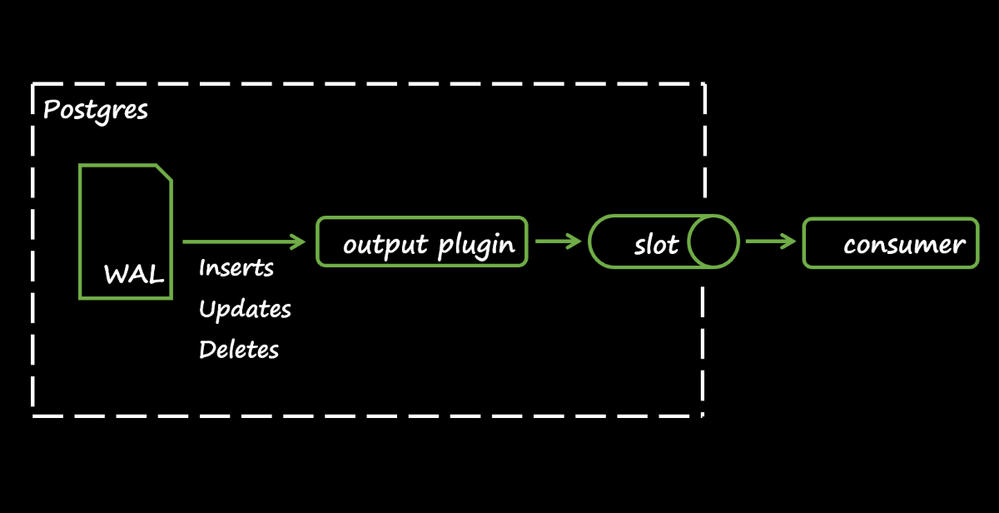

Here's what the logical decoding process looks like:

When a row is changed in a Postgres table, that change is recorded in the WAL. If logical decoding is enabled, the record of that change is passed to the output plugin. The output plugin changes that record from the WAL format to the plugin's format (e.g. a JSON object). Then the reformatted change exits Postgres via a replication slot. Finally, there's the consumer. A consumer is any application of your choice that connects to Postgres and receives the logical decoding output.

To stream logical decoding, three Postgres parameters need to be set in postgresql.conf:

    wal_level = logical
    max_replication_slots = 10
    max_wal_senders = 10

Setting wal_level to logical allows the WAL to record the information needed for logical decoding. max_replication_slots and max_wal_senders must be at least 1, or higher if your server may be using more replication connections.

Then your Postgres server needs to be restarted to apply the changes.
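If you want a quick pre-flight check before creating a slot, here's a small sketch that validates the three values described above. It assumes you've already fetched them (for example with SHOW wal_level; and the same for the other two) into a dictionary of strings; check_logical_decoding_settings is a hypothetical helper, not part of any Postgres tooling.

```python
from typing import Dict, List

def check_logical_decoding_settings(settings: Dict[str, str]) -> List[str]:
    """Return a list of problems; an empty list means the minimums are met."""
    problems: List[str] = []
    # wal_level must be 'logical' for the WAL to carry logical decoding information.
    if settings.get("wal_level") != "logical":
        problems.append(f"wal_level is {settings.get('wal_level')!r}, expected 'logical'")
    # Both need to be at least 1 (higher if you expect more replication connections).
    for name in ("max_replication_slots", "max_wal_senders"):
        value = int(settings.get(name, "0"))
        if value < 1:
            problems.append(f"{name} is {value}, expected at least 1")
    return problems
```

Keep in mind that SHOW reports the running values, so an edit to postgresql.conf only shows up there after the restart.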

I'm using an Azure Database for PostgreSQL server. In Azure, instead of individually setting those three parameters, you can set azure.replication_support:

    az postgres server configuration set --resource-group rgroup --server-name pgserver --name azure.replication_support --value logical

and restart the server:

    az postgres server restart --resource-group rgroup --name pgserver

For our example we'll be using wal2json as the output plugin. WAL to JSON. As you might have guessed, this output plugin converts the Postgres write-ahead log output into JSON objects. wal2json is an open source project that you can download and install to your local Postgres setup. It is already installed on Azure Database for Postgres servers.

We need a slot and a consumer. pg_recvlogical is a Postgres application that can manage slots and consume the stream from them. pg_recvlogical is included in the Postgres distribution. That means if you've ever installed Postgres on your laptop, you probably have pg_recvlogical.

To confirm you have pg_recvlogical, you can open a terminal and run

    pg_recvlogical --version

Now connect to your Postgres database with pg_recvlogical and create a slot. Connect with a user that has replication permissions.

    pg_recvlogical -h pgserver.postgres.database.azure.com -U rachel@pgserver -d postgres --slot logical_slot --create-slot -P wal2json

Then start the slot.

    pg_recvlogical -h pgserver.postgres.database.azure.com -U rachel@pgserver -d postgres --slot logical_slot --start -o pretty-print=1 -f -

This terminal won't return control to you; it is waiting to receive the logical decoding stream.
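If you'd rather drive pg_recvlogical from your own code than from a terminal, one option is to launch it as a subprocess. The sketch below rebuilds the --start command shown above as an argument list and yields its output lines. recvlogical_cmd and stream_lines are hypothetical names, and authentication is assumed to be handled outside the script (for example by a ~/.pgpass file or the PGPASSWORD environment variable).

```python
import subprocess
from typing import Iterator, List

def recvlogical_cmd(host: str, user: str, dbname: str, slot: str) -> List[str]:
    """Argument list equivalent to the pg_recvlogical --start command above."""
    return [
        "pg_recvlogical",
        "-h", host,
        "-U", user,
        "-d", dbname,
        "--slot", slot,
        "--start",
        "-o", "pretty-print=1",  # passed through to the slot's output plugin (wal2json)
        "-f", "-",               # write the decoded stream to stdout
    ]

def stream_lines(cmd: List[str]) -> Iterator[str]:
    """Run the command and yield its stdout line by line."""
    proc = subprocess.Popen(cmd, stdout=subprocess.PIPE, text=True)
    try:
        if proc.stdout is None:
            raise RuntimeError("stdout was not captured")
        for line in proc.stdout:
            yield line
    finally:
        # Stop pg_recvlogical if the caller stops iterating early, then reap it.
        proc.terminate()
        proc.wait()
        if proc.stdout is not None:
            proc.stdout.close()
```

For example, `for line in stream_lines(recvlogical_cmd(host, user, "postgres", "logical_slot")): print(line, end="")`. Stopping the script doesn't drop the slot; it keeps retaining WAL until something consumes it again or you drop it.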

Then we should give the slot something to stream by making data changes in Postgres. To do that, connect to the Postgres database like you normally do. I'm using psql (in a different terminal). Create a table and change some rows.

    CREATE TABLE inventory (id SERIAL, item VARCHAR(30), qty INT, PRIMARY KEY(id));
    INSERT INTO inventory (item, qty) VALUES ('apples', '100');
    UPDATE inventory SET qty = 96 WHERE item = 'apples';
    DELETE FROM inventory WHERE item = 'apples';

You can see the logical decoding output in the pg_recvlogical terminal. The format of the output is determined by wal2json, the output plugin we selected.

    {
      "change": [
      ]
    }
    {
      "change": [
        {
          "kind": "insert",
          "schema": "public",
          "table": "inventory",
          "columnnames": ["id", "item", "qty"],
          "columntypes": ["integer", "character varying(30)", "integer"],
          "columnvalues": [1, "apples", 100]
        }
      ]
    }
    {
      "change": [
        {
          "kind": "update",
          "schema": "public",
          "table": "inventory",
          "columnnames": ["id", "item", "qty"],
          "columntypes": ["integer", "character varying(30)", "integer"],
          "columnvalues": [1, "apples", 96],
          "oldkeys": {
            "keynames": ["id"],
            "keytypes": ["integer"],
            "keyvalues": [1]
          }
        }
      ]
    }
    {
      "change": [
        {
          "kind": "delete",
          "schema": "public",
          "table": "inventory",
          "oldkeys": {
            "keynames": ["id"],
            "keytypes": ["integer"],
            "keyvalues": [1]
          }
        }
      ]
    }

To drop the replication slot:

    pg_recvlogical -h pgserver.postgres.database.azure.com -U rachel@pgserver -d postgres --slot logical_slot --drop-slot

Logical decoding can only output information about DML (data manipulation) events in Postgres, that is INSERT, UPDATE, and DELETE. DDL (data definition) changes like CREATE TABLE, ALTER ROLE, and DROP INDEX are not emitted by logical decoding. And neither is any command that's not an INSERT, UPDATE, or DELETE. Remember when we ran CREATE TABLE in our logical decoding test above? The output was blank:

    {
      "change": [
      ]
    }

For INSERT and UPDATE, a new row is added to a table. This new row data is always sent to the output plugin.

For UPDATE and DELETE, an old row is removed from a table. Whether that old row's data gets sent to the output plugin depends on a Postgres table property called REPLICA IDENTITY. By default, only the primary key values of the old row are sent from the WAL.

As an example, let's look at the update we did before.

    UPDATE inventory SET qty = 96 WHERE item = 'apples';

The logical decoding output was:

    {
      "change": [
        {
          "kind": "update",
          "schema": "public",
          "table": "inventory",
          "columnnames": ["id", "item", "qty"],
          "columntypes": ["integer", "character varying(30)", "integer"],
          "columnvalues": [1, "apples", 96],
          "oldkeys": {
            "keynames": ["id"],
            "keytypes": ["integer"],
            "keyvalues": [1]
          }
        }
      ]
    }

You can see that we get all the new row's data, [1, "apples", 96]. For old data, we only get the primary key column, id.
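The parallel columnnames/columnvalues arrays (and the keynames/keyvalues arrays under oldkeys) are easy to zip into name/value records. Here's a minimal sketch that does that for one wal2json transaction object in the format shown above; to_records is a hypothetical helper name, and the "schema.table" string is just one way to label the table.

```python
import json
from typing import Any, Dict, List

def to_records(transaction_json: str) -> List[Dict[str, Any]]:
    """Turn one wal2json transaction object into name/value records."""
    records: List[Dict[str, Any]] = []
    for change in json.loads(transaction_json)["change"]:
        record: Dict[str, Any] = {
            "kind": change["kind"],
            "table": f'{change["schema"]}.{change["table"]}',
        }
        # INSERT and UPDATE carry the full new row.
        if "columnnames" in change:
            record["new"] = dict(zip(change["columnnames"], change["columnvalues"]))
        # UPDATE and DELETE carry the old key columns (per REPLICA IDENTITY).
        if "oldkeys" in change:
            old = change["oldkeys"]
            record["old"] = dict(zip(old["keynames"], old["keyvalues"]))
        records.append(record)
    return records
```

Applied to the UPDATE output above, this yields a single record whose "new" entry is {"id": 1, "item": "apples", "qty": 96} and whose "old" entry is {"id": 1}.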

REPLICA IDENTITY has other settings (DEFAULT, USING INDEX, FULL, and NOTHING) that change what data you can get about updated and deleted rows.
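With REPLICA IDENTITY's default setting, the primary key in oldkeys is exactly what a downstream cache needs to find the row to replace or evict. Here's a minimal sketch of applying wal2json change objects (already parsed into Python dicts, in the format shown above) to an in-memory cache keyed by schema, table, and primary key. apply_change and the pk_columns mapping are hypothetical; a real consumer would get primary key columns from the catalog or its own configuration.

```python
from typing import Any, Dict, List, Tuple

TableName = Tuple[str, str]                    # (schema, table)
CacheKey = Tuple[TableName, Tuple[Any, ...]]   # (table, primary key values)

def apply_change(
    cache: Dict[CacheKey, Dict[str, Any]],
    change: Dict[str, Any],
    pk_columns: Dict[TableName, List[str]],
) -> None:
    """Apply one wal2json change object to an in-memory cache (a sketch)."""
    table = (change["schema"], change["table"])
    key_cols = pk_columns[table]
    # UPDATE and DELETE carry the old primary key: evict the old entry,
    # which also covers updates that change the key itself.
    if change["kind"] in ("update", "delete") and "oldkeys" in change:
        old = dict(zip(change["oldkeys"]["keynames"], change["oldkeys"]["keyvalues"]))
        cache.pop((table, tuple(old[c] for c in key_cols)), None)
    # INSERT and UPDATE always carry the full new row.
    if change["kind"] in ("insert", "update"):
        row = dict(zip(change["columnnames"], change["columnvalues"]))
        cache[(table, tuple(row[c] for c in key_cols))] = row
```

If a table used REPLICA IDENTITY NOTHING, oldkeys would be missing and this sketch could no longer evict deleted rows, which is one concrete reason the setting matters to consumers.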

We've already talked about one popular output plugin: wal2json. There are other output plugins to choose from. If you are self-hosting Postgres, you could even make your own.

Two output plugins ship natively with Postgres (no additional installation needed):

- test_decoding: Available on Postgres 9.4+. Though created to be just an example of an output plugin, test_decoding is still useful if your consumer supports it (e.g. Qlik Replicate).

- pgoutput: Available since Postgres 10. pgoutput is used by Postgres to support logical replication, and is supported by some consumers for decoding (e.g. Debezium).

An output plugin receives information from the WAL. The plugin then decides what information to keep and how to present that information to you.

For example, new row data is always sent from the WAL to the output plugin. However, wal2json chooses not to output new row data for an UPDATE if the table has no primary key. test_decoding, on the other hand, will publish that row. But test_decoding's output is not JSON with name/value pairs. Pick the output plugin that suits your scenario.
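To see what "not JSON" means in practice: the example output in the PostgreSQL documentation shows test_decoding row changes as plain text lines like table public.data: INSERT: id[integer]:1 data[text]:'1', wrapped in BEGIN and COMMIT lines. Here's a minimal sketch that parses that style of line with regular expressions. It assumes simple INSERT/DELETE lines; it doesn't separate the old-key and new-tuple sections an UPDATE can carry, nor handle type names that contain brackets (such as arrays). parse_test_decoding is a hypothetical name.

```python
import re
from typing import Dict, Optional, Tuple

# "table <schema>.<table>: <KIND>: <columns...>"; BEGIN/COMMIT lines won't match.
_ROW_LINE = re.compile(
    r"^table (?P<schema>[^.]+)\.(?P<table>[^:]+): (?P<kind>INSERT|UPDATE|DELETE): (?P<rest>.*)$"
)
# name[type]:value, where value is a single-quoted string ('' escapes a quote)
# or an unquoted token such as a number.
_COLUMN = re.compile(r"(?P<name>[^\s\[]+)\[(?P<type>[^\]]+)\]:(?P<value>'(?:[^']|'')*'|\S+)")

def parse_test_decoding(line: str) -> Optional[Tuple[str, str, str, Dict[str, str]]]:
    """Return (schema, table, kind, {column: text value}) or None for non-row lines."""
    match = _ROW_LINE.match(line)
    if match is None:
        return None
    columns: Dict[str, str] = {}
    for col in _COLUMN.finditer(match.group("rest")):
        value = col.group("value")
        if value.startswith("'"):
            # Strip the surrounding quotes and undo '' escaping.
            value = value[1:-1].replace("''", "'")
        columns[col.group("name")] = value
    return match.group("schema"), match.group("table"), match.group("kind"), columns
```

Every value comes back as a string, so type conversion is up to your consumer, which is part of why the JSON plugins are convenient.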

Logical decoding outputs data changes as a stream. That stream is called a logical replication slot.

You should keep in mind the following when dealing with slots:

- Each slot has one output plugin (you choose which).

- Each slot provides changes from only one database.

- But a single database can have multiple slots.

- Each data change is normally emitted once per slot.

- However, if the Postgres instance restarts, a slot may re-emit changes. Your consumer needs to handle that situation.

- An unconsumed slot is a threat to your Postgres instance's availability.

Strong words, yes, but it's true. It is critical that you monitor your slots. If a slot's stream isn't being consumed, Postgres will hold on to all the WAL files for those unconsumed changes. This can lead to full storage or transaction ID wraparound.

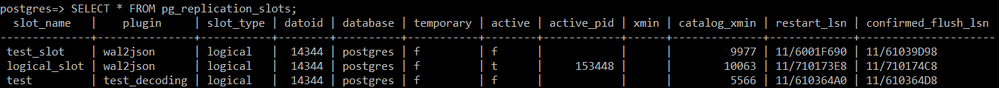

Postgres has a view called pg_replication_slots that tracks the state of all replication slots. Keep your eye on the 'active' column. If a slot does not have a connection to a consumer, the column will be false.
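Here's a minimal sketch of that check. The query uses PostgreSQL 10+ function names (pg_current_wal_lsn and pg_wal_lsn_diff) to estimate how much WAL each slot is holding back. The SlotStatus tuple, the threshold, and slots_needing_attention are hypothetical, and running the query with your database driver is left out so the example runs on its own.

```python
from typing import List, NamedTuple, Optional

# One way to fetch the rows. retained_bytes roughly measures how much WAL
# the slot is holding back; restart_lsn (and so the diff) can be NULL.
SLOT_QUERY = """
SELECT slot_name,
       active,
       pg_wal_lsn_diff(pg_current_wal_lsn(), restart_lsn) AS retained_bytes
FROM pg_replication_slots;
"""

class SlotStatus(NamedTuple):
    slot_name: str
    active: bool
    retained_bytes: Optional[int]

def slots_needing_attention(rows: List[SlotStatus], max_retained_bytes: int) -> List[str]:
    """Flag slots with no connected consumer or with too much retained WAL."""
    flagged: List[str] = []
    for row in rows:
        retained = row.retained_bytes or 0  # treat NULL as nothing retained yet
        if not row.active or retained > max_retained_bytes:
            flagged.append(row.slot_name)
    return flagged
```

Flagging on retained WAL as well as on active catches a consumer that is connected but falling behind, which the active column alone won't show.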

Another way to watch for the impact of an inactive slot is to have storage alerts configured. If storage is growing and you don't know why, it may be due to WAL file retention by an unconsumed slot.

Finally, the consumer you choose may come with built-in monitoring, so look for that feature.

You are better off deleting an unused slot than keeping it around.
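Since a slot may re-emit changes after a restart, your consumer needs to tolerate duplicates. One common way is to make it idempotent by remembering the position it last applied. Here's a minimal sketch, assuming your consumer receives the commit LSN (log sequence number, written like 0/16B3748) of each transaction; how you obtain it depends on your output plugin and client. Logical decoding emits transactions in commit order, so anything at or below the stored LSN has already been applied. DedupingConsumer is a hypothetical class.

```python
from typing import Any, List

def lsn_to_int(lsn: str) -> int:
    """Convert a textual LSN such as '0/16B3748' into a comparable integer."""
    high, low = lsn.split("/")
    return (int(high, 16) << 32) | int(low, 16)

class DedupingConsumer:
    """Skips transactions at or before the last commit LSN it applied."""

    def __init__(self, last_applied_lsn: str = "0/0") -> None:
        # In a real consumer, load this from durable storage on startup.
        self.last_applied = lsn_to_int(last_applied_lsn)
        self.applied: List[Any] = []

    def handle(self, commit_lsn: str, transaction: Any) -> bool:
        position = lsn_to_int(commit_lsn)
        if position <= self.last_applied:
            return False  # already applied before a restart: skip the duplicate
        self.applied.append(transaction)  # stand-in for writing to the target
        # In a real consumer, persist this atomically with the applied data.
        self.last_applied = position
        return True
```

Keeping the LSN in memory, as here, only protects against duplicates within one run; the protection across restarts comes from persisting it alongside the data.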

Recall that a consumer is any application that can connect to Postgres and ingest the logical decoding stream. We used pg_recvlogical as the consumer in our example earlier.

You can create your own consumer, an app that parses and redirects the Postgres logical decoding stream to other components in your system. For example, in this PGConf.EU presentation Webedia uses a custom service called walparser to convert wal2json's output into MQ messages, then sends the messages to RabbitMQ and ElasticSearch. Another example is Netflix's DBLog.
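A custom consumer has to cope with the stream arriving in arbitrary chunks, and pretty-printed wal2json output spreads each transaction over many lines. Here's a minimal sketch of a consumer core that buffers incoming text, emits each complete transaction object, and routes its changes to per-table handlers. Wal2JsonStreamDecoder, route, and the handler mapping are hypothetical names; the handlers stand in for whatever pushes to a queue or search index in your system.

```python
import json
from typing import Any, Callable, Dict, List

Change = Dict[str, Any]

class Wal2JsonStreamDecoder:
    """Buffers text and returns each complete top-level JSON object (one per transaction)."""

    def __init__(self) -> None:
        self._buffer = ""
        self._decoder = json.JSONDecoder()

    def feed(self, chunk: str) -> List[Dict[str, Any]]:
        self._buffer += chunk
        complete: List[Dict[str, Any]] = []
        while True:
            # raw_decode doesn't accept leading whitespace, so strip it first.
            text = self._buffer.lstrip()
            if not text:
                self._buffer = ""
                return complete
            try:
                obj, end = self._decoder.raw_decode(text)
            except json.JSONDecodeError:
                # Most likely an object that hasn't fully arrived; wait for more input.
                self._buffer = text
                return complete
            complete.append(obj)
            self._buffer = text[end:]

def route(transactions: List[Dict[str, Any]], handlers: Dict[str, Callable[[Change], None]]) -> None:
    """Send each change to the handler for its table name (schema ignored for brevity)."""
    for txn in transactions:
        for change in txn.get("change", []):
            handler = handlers.get(change["table"])
            if handler is not None:
                handler(change)
```

You'd feed the decoder whatever chunks your transport delivers, for example lines read from pg_recvlogical's stdout. Because an incomplete object and a malformed one both raise JSONDecodeError, this sketch would buffer malformed input forever; a production consumer would want a size limit or stricter framing.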

Or, instead of making your own consumer, you can let someone else do the heavy lifting. There are change data capture connectors available that support Postgres logical decoding as a source and provide connections to various targets.

If you are looking for an open-source offering, Debezium is a popular change data capture solution built on Apache Kafka. Learn more about Debezium from their FAQ or this deep dive into change data capture patterns.

You could also explore paid services like Striim and Qlik Replicate. One advantage of all three consumers, compared to creating your own solution, is that they support a variety of other sources like MySQL, Oracle, and SQL Server. If you have data in other database engines, you can use their connectors to integrate data instead of building a custom connector for each one.

Logical decoding in PostgreSQL provides an efficient way for your other app components to stay up-to-date with data changes in your Postgres database. Write once to the reliable Postgres log, then derive those change events for downstream targets like caches and search indexes. Instead of a pull model where each component queries Postgres at some interval, this is a push model where Postgres notifies you and your application of each change, as it happens. With logical decoding your Postgres database becomes a centerpiece of your dynamic real-time application.

Source: https://techcommunity.microsoft.com/t5/azure-database-for-postgresql/change-data-capture-in-postgres-how-to-use-logical-decoding-and/ba-p/1396421

Posted by: williamsbour1950.blogspot.com
